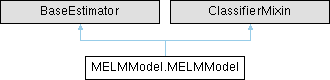

Multi-layer Extreme Learning Machine (MELM) model.

MELMModel is a multi-layer variant of the Extreme Learning Machine (ELM) model, consisting of multiple layers, each with its own computational units.
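For readers new to ELMs, the computational unit that each layer builds on can be sketched in a few lines of NumPy. This is the textbook single-layer ELM, not this package's code. The input weights and biases are random and stay fixed, and only the output weights β are learned, in closed form, by ridge-regularized least squares: β = (HᵀH + I/C)⁻¹HᵀT. The helper names `elm_fit` and `elm_predict` are hypothetical. Treating `C` as the same regularization constant that `ELMLayer` accepts is an assumption, and so is using mish as the activation to match the examples.

```python
import numpy as np

def mish(z):
    # mish(z) = z * tanh(softplus(z)); np.logaddexp(0, z) is a stable softplus
    return z * np.tanh(np.logaddexp(0.0, z))

def elm_fit(X, T, number_neurons=100, C=10.0, seed=0):
    """Fit one classical ELM layer (hypothetical sketch); returns (W, b, beta)."""
    rng = np.random.default_rng(seed)
    # Input weights and biases are drawn at random and never updated
    W = rng.standard_normal((X.shape[1], number_neurons))
    b = rng.standard_normal(number_neurons)
    H = mish(X @ W + b)  # hidden-layer output matrix, shape (n_samples, number_neurons)
    # Only the output weights are learned: beta = (H^T H + I/C)^-1 H^T T
    beta = np.linalg.solve(H.T @ H + np.eye(number_neurons) / C, H.T @ T)
    return W, b, beta

def elm_predict(X, W, b, beta):
    # Same fixed random mapping, followed by the learned linear read-out
    return mish(X @ W + b) @ beta

# Toy two-class problem with one-hot targets
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 2))
labels = (X[:, 0] + X[:, 1] > 0).astype(int)
T = np.eye(2)[labels]
W, b, beta = elm_fit(X, T, number_neurons=100, C=10.0)
train_acc = np.mean(elm_predict(X, W, b, beta).argmax(axis=1) == labels)
```

Since fitting reduces to one linear solve, training involves no gradient descent or epochs. A larger `C` weakens the regularization term `I/C`.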

Parameters:

-----------

layers (list, optional): List of layers. Defaults to None.

verbose (int, optional): Verbosity level, controlling the amount of information printed during model fitting and prediction. Defaults to 0.

Attributes:

-----------

classes_ (array-like): The class labels. Initialized to None and populated during fitting.
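Fitting is where `classes_` gets filled in. The sketch below is minimal and assumes the scikit-learn convention of sorted unique labels, which the documentation above does not confirm. `np.unique` returns both the labels and each sample's class index. That is enough to build the one-hot targets an ELM output layer is typically solved against, and to map predicted column indices back to labels. `encode_targets` is a hypothetical helper.

```python
import numpy as np

def encode_targets(y):
    """Hypothetical helper: return (classes_, one-hot targets) for labels y."""
    # Sorted unique labels, plus the class index of every sample
    classes_, idx = np.unique(y, return_inverse=True)
    idx = idx.ravel()  # keep the index array 1-D regardless of NumPy version
    T = np.zeros((len(y), len(classes_)))
    T[np.arange(len(y)), idx] = 1.0  # a single 1 per row, in that sample's class column
    return classes_, T

y = np.array(["cat", "dog", "cat", "bird"])
classes_, T = encode_targets(y)
decoded = classes_[T.argmax(axis=1)]  # column index -> original label
```

`np.unique` sorts its output, so column `j` of the targets (and of any probability matrix derived from them) corresponds to `classes_[j]`.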

Examples:

-----------

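Import the helpers used below (`MELMModel` and `ELMLayer` are assumed to be imported from this package already, and `X`, `y` to hold the features and labels)

>>> import numpy as np
>>> from sklearn.metrics import accuracy_score
>>> from sklearn.model_selection import RepeatedKFold, cross_val_score

Create a Multilayer ELM model

>>> model = MELMModel()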

Add 3 layers of neurons

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=2000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

Define a cross-validation strategy

>>> cv = RepeatedKFold(n_splits=10, n_repeats=50)

Perform cross-validation to evaluate the model performance

>>> scores = cross_val_score(model, X, y, cv=cv, scoring='accuracy', error_score='raise')

Print the mean accuracy score obtained from cross-validation

>>> print(np.mean(scores))

Fit the ELM model to the entire dataset

>>> model.fit(X, y)

Save the trained model to a file

>>> model.save("Saved Models/MELM_Model.h5")

Load the saved model from the file

>>> model = model.load("Saved Models/MELM_Model.h5")

Evaluate the accuracy of the model on the training data

>>> acc = accuracy_score(y, model.predict(X))

MELMModel.MELMModel.fit(self, x, y)

Fit the MELM model to input-output pairs.

This method trains the MELM model by fitting its layers one after another.

During training, the input data is propagated through each layer in turn.
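One way to implement this layer-by-layer procedure is the greedy ELM-autoencoder stacking used in multi-layer ELM formulations. Each hidden layer is fitted to reconstruct its own input. Its learned output weights then define the mapping to the next representation, and only the last layer is solved against the targets. The documentation does not confirm that `MELMModel` works this way. `ToyLayer` and `fit_layers` are hypothetical names, and the sketch only illustrates the control flow of fitting each layer and propagating its output.

```python
import numpy as np

def mish(z):
    # mish(z) = z * tanh(softplus(z)), using a numerically stable softplus
    return z * np.tanh(np.logaddexp(0.0, z))

class ToyLayer:
    """Hypothetical stand-in for ELMLayer; not the library's class."""

    def __init__(self, number_neurons, C=10.0, seed=0):
        self.number_neurons = number_neurons
        self.C = C
        self.seed = seed

    def fit(self, X, T):
        rng = np.random.default_rng(self.seed)
        # Random input weights and biases stay fixed
        self.W = rng.standard_normal((X.shape[1], self.number_neurons))
        self.b = rng.standard_normal(self.number_neurons)
        H = mish(X @ self.W + self.b)
        # Ridge-regularized output weights: beta = (H^T H + I/C)^-1 H^T T
        A = H.T @ H + np.eye(self.number_neurons) / self.C
        self.beta = np.linalg.solve(A, H.T @ T)
        return self

def fit_layers(layers, X, T):
    """Greedy layer-wise training (sketch, ML-ELM autoencoder style)."""
    for layer in layers[:-1]:
        layer.fit(X, X)               # hidden layer learns to reconstruct its own input
        X = mish(X @ layer.beta.T)    # new representation has number_neurons columns
    layers[-1].fit(X, T)              # only the last layer sees the targets
    return X                          # the features that fed the final layer

rng = np.random.default_rng(0)
X = rng.standard_normal((100, 5))
T = np.eye(3)[rng.integers(0, 3, size=100)]  # random one-hot targets, shapes only
layers = [ToyLayer(20, seed=1), ToyLayer(30, seed=2), ToyLayer(20, seed=3)]
features = fit_layers(layers, X, T)
```

In this sketch, every layer costs one closed-form solve. Training time therefore grows with the number and width of the layers rather than with a number of epochs.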

Args:

-----------

x (array-like): Input data.

y (array-like): Target output data (e.g. class labels).

Examples:

-----------

Create a Multilayer ELM model

>>> model = MELMModel()

Add 3 layers of neurons

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=2000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

Fit the ELM model to the entire dataset

>>> model.fit(X, y)

MELMModel.MELMModel.predict(self, x)

Predict the output for input data.

This method predicts the output for the given input data by propagating it through the layers of the MELM model.
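Prediction repeats the same layer-by-layer propagation without any further fitting. Each hidden layer transforms its input, and the last layer produces one score per class. For a classifier, the index of the largest score is then mapped back to a label in `classes_`. In the sketch below, the parameters are random placeholders standing in for fitted ones. The per-layer transform `mish(X @ A)` and the helper `forward_predict` are assumptions for illustration, not taken from this documentation.

```python
import numpy as np

def mish(z):
    # mish(z) = z * tanh(softplus(z)), with a numerically stable softplus
    return z * np.tanh(np.logaddexp(0.0, z))

def forward_predict(X, hidden_maps, W_out, b_out, beta, classes_):
    """Propagate X through (hypothetical) fitted layers and return labels."""
    for A in hidden_maps:
        X = mish(X @ A)                          # hidden layer: new representation
    scores = mish(X @ W_out + b_out) @ beta      # last layer: one score column per class
    return classes_[np.argmax(scores, axis=1)]   # column index -> original label

rng = np.random.default_rng(0)
hidden_maps = [rng.standard_normal((4, 8)), rng.standard_normal((8, 6))]  # placeholders
W_out, b_out = rng.standard_normal((6, 10)), rng.standard_normal(10)
beta = rng.standard_normal((10, 3))
classes_ = np.array([10, 20, 30])
pred = forward_predict(rng.standard_normal((5, 4)), hidden_maps, W_out, b_out, beta, classes_)
```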

Args:

-----------

x (array-like): Input data.

Returns:

-----------

array-like: Predicted output.

Examples:

-----------

Create a Multilayer ELM model

>>> model = MELMModel()

Add 3 layers of neurons

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=2000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

Fit the ELM model to the entire dataset

>>> model.fit(X, y)

Evaluate the accuracy of the model on the training data

>>> acc = accuracy_score(y, model.predict(X))

MELMModel.MELMModel.predict_proba(self, x)

Predict class probabilities for input data.

This method predicts class probabilities for the given input data by propagating it through the layers of the MELM model and applying softmax.
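The final step, turning raw output scores into class probabilities, is the softmax function. A numerically stable NumPy version subtracts each row's maximum before exponentiating. This leaves the result unchanged but avoids overflow. The `scores` array is made-up illustration data, not output from `MELMModel`, and `softmax` is a hypothetical helper rather than this package's function.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax: each row of the result is non-negative and sums to 1."""
    shifted = scores - scores.max(axis=1, keepdims=True)  # stability shift; result unchanged
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

scores = np.array([[2.0, 1.0, 0.1],
                   [1000.0, 1000.0, 1000.0]])  # a naive exp would overflow on this row
proba = softmax(scores)
```

Softmax preserves the ordering within each row, so the most probable class always corresponds to the largest raw score.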

Args:

-----------

x (array-like): Input data.

Returns:

-----------

array-like: Predicted class probabilities.

Examples:

-----------

Create a Multilayer ELM model

>>> model = MELMModel()

Add 3 layers of neurons

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=2000, activation='mish', C=10))

>>> model.add(ELMLayer(number_neurons=1000, activation='mish', C=10))

Fit the ELM model to the entire dataset

>>> model.fit(X, y)

Compute the predicted class probabilities on the training data

>>> pred_proba = model.predict_proba(X)